AI Can Now ‘See’ Optical Illusions — And What It Reveals About…

AI Learns to See What Isn’t Really There

Artificial intelligence has reached a surprising new milestone: it can now “see” optical illusions in ways that closely resemble human perception. According to a recent exploration published by the BBC, advanced AI vision systems are no longer just identifying objects accurately, but are also falling for visual tricks that have puzzled neuroscientists and psychologists for decades. This development is not merely a novelty. It opens a new window into how both machines and human brains interpret the world.

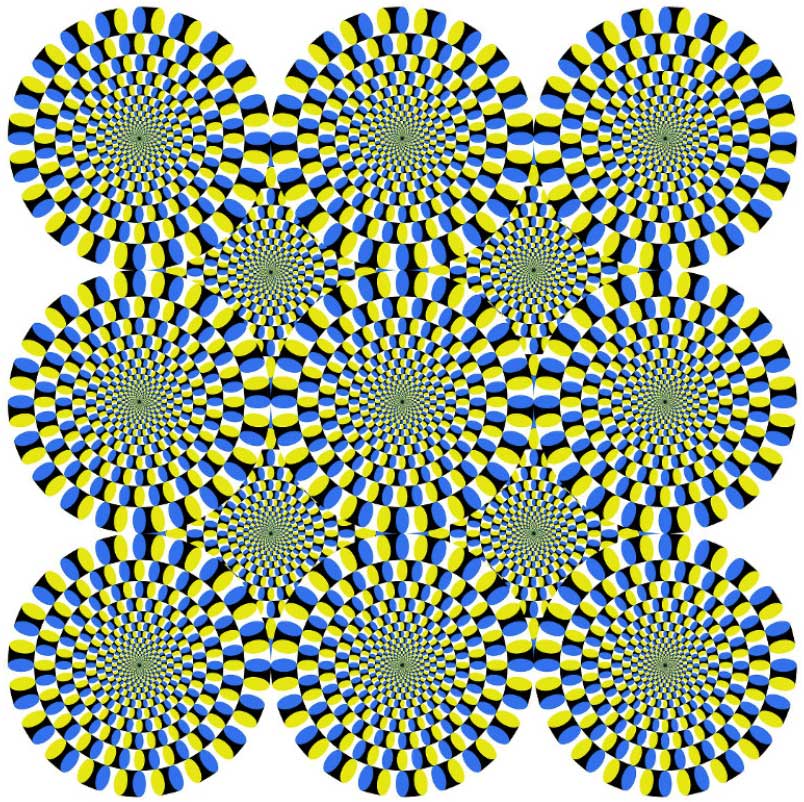

Optical illusions have long been used to study perception because they reveal a fundamental truth: seeing is not the same as recording reality. When AI systems begin to misinterpret images in the same way humans do, it suggests that perception—whether biological or artificial—relies on similar shortcuts, assumptions, and predictive mechanisms.

Why Optical Illusions Matter to Science

Optical illusions are powerful scientific tools because they expose the brain’s inner workings. Humans do not passively absorb visual information. Instead, the brain actively constructs reality based on incomplete data, past experience, and expectations. Illusions exploit this process, causing us to see motion where there is none, colors that don’t exist, or shapes that defy physical rules.

For decades, scientists believed these errors were uniquely human, tied to biological neurons and evolutionary adaptations. The fact that AI systems—built from code and mathematics—can now experience similar perceptual distortions challenges that assumption. It suggests that illusion is not a flaw of biology, but a consequence of intelligent interpretation itself.

How AI Vision Systems Actually ‘See’

Modern AI vision relies on deep neural networks trained on vast datasets of images. These systems do not “see” in the human sense. They process pixel patterns, detect edges, infer depth, and classify shapes based on probability. Yet when exposed to optical illusions, many AI models respond in ways strikingly similar to humans.

This happens because both AI and human vision systems face the same fundamental problem: the visual world is ambiguous. A two-dimensional image must be interpreted as a three-dimensional scene. To solve this efficiently, both systems use assumptions—about lighting, perspective, continuity, and motion. Illusions exploit these assumptions, leading to predictable misinterpretations.

When Machines Fall for the Same Visual Tricks

Researchers have found that certain AI models misjudge size, brightness, and motion in classic optical illusions. In some cases, the AI’s “mistake” mirrors human error almost perfectly. For example, illusions that rely on contrast can cause AI to perceive false boundaries or exaggerated differences, just as humans do.
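To make the contrast case concrete, here is a minimal sketch of how a context-sensitive detector can report different brightness for two physically identical patches. It is a toy model, not the method used by the researchers: it assumes a simple center-minus-surround comparison, a common textbook account of simultaneous contrast, and every name and number in it (`make_strip`, `center_surround`, the luminance values) is hypothetical.

```python
# Toy model of simultaneous contrast (illustrative only, not a real vision system).
# All names and values are assumptions made for this sketch.

def make_strip(background, patch=0.5, length=21, patch_start=8, patch_width=5):
    """1-D luminance profile: a uniform background with a gray patch in the middle."""
    strip = [background] * length
    for i in range(patch_start, patch_start + patch_width):
        strip[i] = patch
    return strip

def center_surround(signal, i, center_radius=1, surround_radius=6):
    """Mean of a small center window minus the mean of the ring around it."""
    center = signal[i - center_radius : i + center_radius + 1]
    window = signal[i - surround_radius : i + surround_radius + 1]
    surround_mean = (sum(window) - sum(center)) / (len(window) - len(center))
    return sum(center) / len(center) - surround_mean

on_dark = make_strip(background=0.2)   # gray patch on a dark background
on_light = make_strip(background=0.8)  # the same gray patch on a light background
middle = 10                            # index at the center of the patch

response_dark = center_surround(on_dark, middle)
response_light = center_surround(on_light, middle)

# The patch has the same luminance in both strips...
print("same patch value:", on_dark[middle] == on_light[middle])
# ...but the detector's response has opposite signs depending on the background.
print("response on dark background: ", response_dark)
print("response on light background:", response_light)
```

The detector never measures the patch in isolation. It measures the patch relative to its neighborhood, so the same gray produces a positive response on the dark background and a negative one on the light background. That is the kind of consistent, explainable "mistake" described above.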

This convergence is significant. It indicates that perception errors are not random. They emerge from the logic of interpretation itself. When an intelligent system tries to make sense of limited data quickly, it will sometimes be wrong in consistent, explainable ways.

What This Reveals About the Human Brain

The fact that AI can replicate human perceptual errors suggests that our brains operate less like cameras and more like prediction engines. The brain constantly guesses what it is seeing, updating its interpretation as new information arrives. Optical illusions are cases where those predictions overpower raw sensory input.
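One common way to formalize the "prediction engine" idea is Bayesian cue combination, sketched below. This is an illustrative assumption rather than a claim about how the brain or any particular AI model is implemented: the expectation and the sensory signal are both treated as Gaussians, and the function name and all numbers are hypothetical.

```python
# Sketch of perception as a precision-weighted blend of expectation and evidence.
# Assumes Gaussian prior and Gaussian sensory noise; values are made up.

def posterior_mean(observation, sensory_sd, prior_mean, prior_sd):
    """Combine a Gaussian prior with a Gaussian observation.

    Each source is weighted by its precision (1 / variance), so the less
    reliable the senses are, the more the estimate leans on the prior.
    """
    w_obs = 1.0 / sensory_sd ** 2
    w_prior = 1.0 / prior_sd ** 2
    return (w_obs * observation + w_prior * prior_mean) / (w_obs + w_prior)

# The stimulus is really at 40 (arbitrary units); the system expects values near 0.
clear_view = posterior_mean(observation=40.0, sensory_sd=5.0,
                            prior_mean=0.0, prior_sd=20.0)
noisy_view = posterior_mean(observation=40.0, sensory_sd=40.0,
                            prior_mean=0.0, prior_sd=20.0)

print("estimate with reliable input:  ", clear_view)
print("estimate with unreliable input:", noisy_view)
```

With reliable input the estimate stays close to the true value of 40. With unreliable input it is pulled most of the way toward the expected value of 0. In this framing, an illusion is a case where the prior wins that tug-of-war.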

AI models that exhibit similar behavior help scientists test theories about human cognition. If a specific network architecture produces the same illusion response as humans, it strengthens the idea that the brain may use comparable computational strategies, even if implemented biologically rather than digitally.

Perception Is About Prediction, Not Reality

One of the most important insights from AI and optical illusions is that perception is not about truth, but usefulness. The brain evolved to make fast, efficient judgments that usually work well enough for survival. Seeing shadows as depth or assuming light comes from above is generally helpful, even if it occasionally leads to illusion.

AI systems trained to optimize accuracy and efficiency develop similar biases. They learn what usually works, not what is always correct. This parallel helps explain why illusions are so persistent, and why many of them appear across cultures and even across species.

Why AI Doesn’t Always See the Same Illusions

Interestingly, not all AI systems fall for the same illusions humans do. Some models are immune to certain visual tricks, while being vulnerable to others that humans barely notice. This difference is just as informative as similarity.

It shows that perception depends heavily on architecture and training. When AI behaves differently from humans, it highlights which aspects of human vision are learned through experience and which may be hardwired through evolution. These differences help researchers isolate the unique features of biological perception.

Illusions as a Diagnostic Tool for AI

Optical illusions are now being used to test and refine AI vision systems. By analyzing how and why a model misinterprets an image, engineers can better understand its internal decision-making processes. This is particularly important for applications like autonomous driving and medical imaging, where perceptual errors can have serious consequences.

Illusions act as stress tests, revealing hidden assumptions and vulnerabilities in AI systems. They expose where models rely too heavily on context or fail to adapt when expectations are violated.
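A stress test of this kind can be sketched as a small harness that shows a model the same target in different contexts and measures how much its answer moves. The code below is a hypothetical illustration, not a real evaluation suite: `illusion_gap`, `make_stimulus`, and both toy models are invented for this example, and a real test would wrap an actual vision model and actual illusion images.

```python
from typing import Callable, List, Sequence

# Hypothetical harness: how much does a model's estimate of one fixed target
# change when only the surrounding context changes?

def make_stimulus(target: float, background: float, width: int = 11) -> List[float]:
    """1-D luminance profile with the target at the middle index."""
    stimulus = [background] * width
    stimulus[width // 2] = target
    return stimulus

def illusion_gap(model: Callable[[Sequence[float]], float],
                 target: float,
                 backgrounds: Sequence[float]) -> float:
    """Spread of the model's target estimates across backgrounds (0 = context-free)."""
    estimates = [model(make_stimulus(target, b)) for b in backgrounds]
    return max(estimates) - min(estimates)

def literal_model(stimulus: Sequence[float]) -> float:
    """Toy model that reads the target value directly and ignores context."""
    return stimulus[len(stimulus) // 2]

def contextual_model(stimulus: Sequence[float]) -> float:
    """Toy model that judges the target relative to the average of its surround."""
    mid = len(stimulus) // 2
    surround = [v for i, v in enumerate(stimulus) if i != mid]
    return stimulus[mid] - 0.5 * (sum(surround) / len(surround))

backgrounds = [0.2, 0.5, 0.8]
gap_literal = illusion_gap(literal_model, target=0.5, backgrounds=backgrounds)
gap_contextual = illusion_gap(contextual_model, target=0.5, backgrounds=backgrounds)

print("literal model gap:   ", gap_literal)
print("contextual model gap:", gap_contextual)
```

A gap of zero means the model's answer does not depend on context at all. A large gap flags a model that leans heavily on its surroundings, which is the kind of hidden assumption such tests are meant to surface.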

What This Means for Trusting AI Vision

As AI systems become more integrated into daily life, understanding their perceptual limitations becomes critical. If AI can be fooled by illusions, it can also be misled by unusual lighting, unexpected angles, or adversarial images. Recognizing this helps set realistic expectations about what AI can and cannot reliably do.

The lesson is not that AI vision is weak, but that it is interpretive. Just like humans, AI systems are not infallible observers of reality. They are intelligent guessers, shaped by training data and design choices.

AI as a Mirror for Human Cognition

One of the most fascinating outcomes of this research is how AI has become a tool for studying ourselves. By building systems that approximate human perception, scientists gain a controlled environment to test cognitive theories that are difficult to examine directly in the brain.

AI allows researchers to manipulate variables, architectures, and learning conditions in ways impossible with human subjects. When AI behaves like us, it validates certain models of cognition. When it behaves differently, it challenges assumptions and sparks new questions.

Rethinking Intelligence and Perception

The ability of AI to experience optical illusions forces a broader rethink of intelligence. Intelligence is not about perfect accuracy. It is about making sense of the world under uncertainty. Errors are not bugs; they are side effects of efficiency.

Human perception, long viewed as uniquely rich and subjective, may share deeper common ground with artificial systems than previously thought. This does not reduce the mystery of the brain, but reframes it in computational terms.

Implications for Neuroscience and Psychology

For neuroscientists and psychologists, AI provides a powerful new experimental partner. Instead of relying solely on behavioral experiments and brain imaging, researchers can now compare human perception directly with artificial models whose internal activity can be inspected layer by layer and unit by unit.

This could accelerate understanding of conditions where perception differs from the norm, such as visual disorders or hallucinations. By seeing how AI breaks, scientists may better understand how human perception can break—and how to fix it.

The Philosophical Question: What Is Reality?

At a deeper level, AI and optical illusions raise philosophical questions about reality itself. If both humans and machines consistently misinterpret the same images, what does that say about objectivity? It suggests that perception is always mediated, shaped by the observer’s internal model of the world.

Reality, as experienced, is not a direct imprint of the external world, but a constructed interpretation. AI, unintentionally, is helping make that abstract idea concrete.

Why This Research Matters Now

The timing of this discovery is important. AI systems are rapidly moving from labs into society. Understanding their perceptual biases is essential for safety, ethics, and design. At the same time, neuroscience is increasingly embracing computational models to explain cognition.

The intersection of AI and optical illusions sits at this crossroads, offering insights that benefit both fields. It demonstrates how progress in artificial intelligence can illuminate fundamental questions about the human mind.

A Future Where AI Helps Decode the Brain

As AI models grow more sophisticated, their similarities to human cognition may deepen. Future systems could help simulate not just perception, but attention, memory, and emotion. Optical illusions are just the beginning.

By studying where AI succeeds and fails, scientists may unlock new understanding of how the brain constructs experience. In this sense, AI is not replacing human insight, but amplifying it.

Conclusion: When Machines Teach Us How We See

The fact that AI can now “see” optical illusions is more than a technical curiosity. It is a reminder that perception—human or artificial—is an active process shaped by assumptions, predictions, and context. When machines make the same mistakes we do, they reveal the hidden logic behind our own experience.

Rather than diminishing human uniqueness, this discovery highlights the elegance and efficiency of the brain’s design. It shows that intelligence, at its core, is about navigating uncertainty. In helping us understand how machines see illusions, AI is also helping us see ourselves more clearly than ever before.