
Leaker Suggests Future iPhones Could Get Multispectral Cameras — A Major Evolution…

A new leak suggests Apple may be exploring multispectral imaging technology for future iPhone cameras, a shift beyond traditional RGB sensors that could dramatically enhance computational photography, material detection, and Apple’s Visual Intelligence. While still in early evaluation within Apple’s supply chain, this development hints at a potentially significant leap in how iPhones capture and interpret visual data. (AppleInsider)

Introduction

Apple’s iPhone camera has long been a benchmark in smartphone imaging, thanks to cutting-edge sensors and powerful computational photography. According to a recent report from 9to5Mac, renowned Weibo leaker Digital Chat Station claims future iPhones may adopt multispectral camera technology — offering capabilities far beyond the standard red/green/blue (RGB) imaging used today. (9to5Mac)

This rumor has drawn attention across the tech community, as multispectral imaging could bring more advanced photography and on-device intelligence to mobile devices.

What Is Multispectral Imaging and Why It Matters

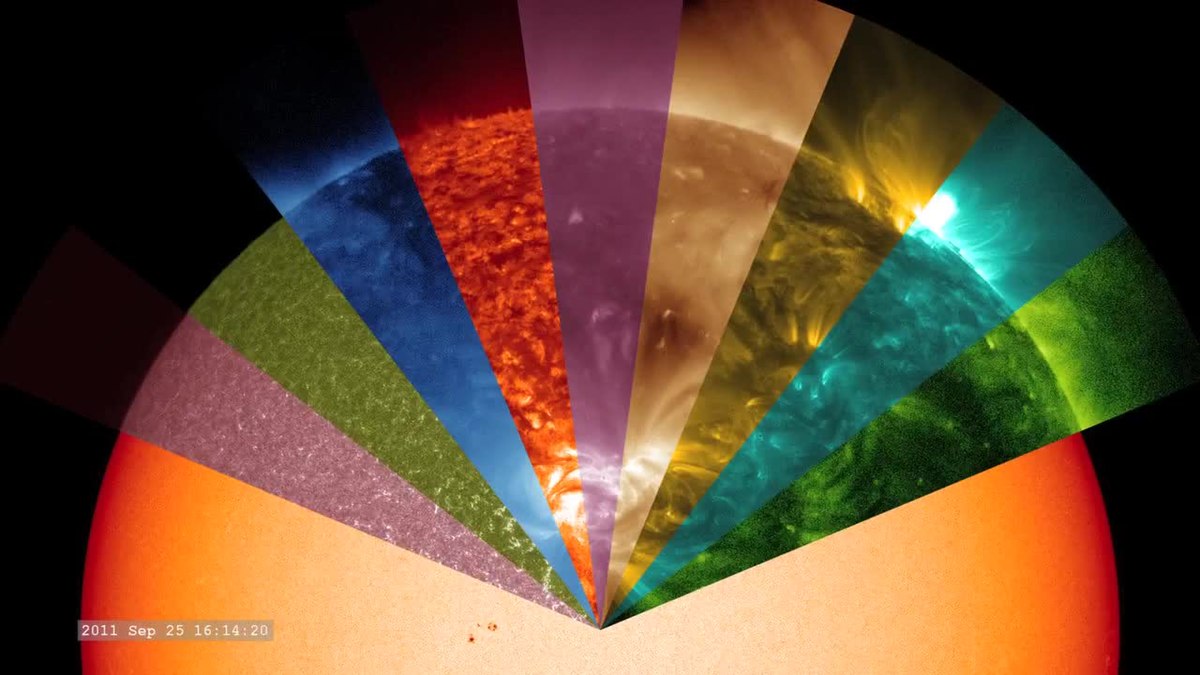

Traditional smartphone cameras capture light in three broad color channels (red, green, and blue) that mimic human vision. In contrast, multispectral cameras record light in a larger number of narrower wavelength bands, potentially including near-infrared, enabling deeper analysis of a scene’s physical properties. (AppleInsider)

This is why multispectral imaging is widely used in industrial, scientific, and agricultural applications, where accurate material differentiation and detection beyond visible light are crucial. In the smartphone realm, such technology could bring new levels of detail and understanding to images. (MacRumors)
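To make the idea of material differentiation concrete, the sketch below computes the Normalized Difference Vegetation Index (NDVI), a standard measure from agricultural remote sensing that compares near-infrared and red reflectance. It is only an illustration of how an extra band can separate materials that look alike in RGB. It does not describe anything Apple is reported to be building. The reflectance values, the `samples` dictionary, the `looks_like_vegetation` helper, and its 0.4 threshold are all assumptions chosen for the example.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index: (NIR - Red) / (NIR + Red)."""
    total = nir + red
    if total == 0:
        return 0.0  # no signal in either band; treat as neutral
    return (nir - red) / total


def looks_like_vegetation(nir: float, red: float, threshold: float = 0.4) -> bool:
    """Hypothetical helper: the threshold is an illustrative assumption."""
    return ndvi(nir, red) > threshold


# Illustrative reflectance values in the range 0..1 (assumed, not measured).
# A leaf and green paint can look similar in visible light, but living
# foliage reflects far more near-infrared light than most paints do.
samples = {
    "healthy leaf": {"red": 0.05, "nir": 0.50},
    "green paint": {"red": 0.05, "nir": 0.06},
    "bare soil": {"red": 0.25, "nir": 0.30},
}

if __name__ == "__main__":
    for name, bands in samples.items():
        score = ndvi(bands["nir"], bands["red"])
        label = "vegetation" if looks_like_vegetation(bands["nir"], bands["red"]) else "other"
        print(f"{name}: NDVI = {score:.2f} ({label})")
```

With these assumed values, the leaf and the paint share the same red reflectance, so a red channel alone could not tell them apart. The near-infrared band is what separates them.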

How Multispectral Cameras Could Transform iPhone Photography

If Apple successfully implements multispectral sensors into future iPhones, the implications could include:

1. Improved Material Detection and Scene Understanding

Multispectral data allows cameras to distinguish how different materials reflect light across wavelengths, helping the device more accurately identify subjects like skin, foliage, or fabric — which could refine portrait effects and object recognition. (MacRumors)

2. Enhanced Computational Photography

With richer spectral data, Apple’s camera algorithms could produce cleaner images in complex lighting conditions, improving dynamic range, detail, and noise reduction — particularly in low light.

3. Boost to Apple’s Visual Intelligence

Apple’s AI and machine learning features, branded as Apple Intelligence and including Visual Intelligence, could leverage multispectral input to better analyze scenes, improving depth mapping, object tracking, and semantic recognition beyond what RGB cameras can achieve.

Technical Challenges and Industry Context

Despite its promise, multispectral imaging presents several challenges:

- Complex sensor design: Integrating multiple wavelength detection within the slim iPhone chassis could complicate internal hardware layouts. (Android Headlines)

- Increased production costs: More advanced sensors and processing hardware typically raise manufacturing expenses. (Android Headlines)

- Space constraints: Smartphone design prioritizes thinness and compact components, making hardware expansion difficult without trade-offs.

Moreover, while multispectral technology has appeared in niche smartphones (for example, in select Huawei handsets), it has not yet seen widespread consumer adoption or overwhelmingly positive reviews — signaling that Apple’s approach would need refinement for mainstream use. (9to5Mac)

What the Leaks Really Reveal (and What They Don’t)

The core source of this speculation, Digital Chat Station, reportedly notes that Apple is evaluating multispectral camera components within its supply chain, but that formal testing has not yet begun. This suggests the technology remains at an early stage and is unlikely to appear in the next iPhone releases. (MacRumors)

At the same time, other related leaks hint at camera upgrades such as variable aperture lenses and larger telephoto apertures in upcoming models like the iPhone 18 Pro, though these are separate from multispectral sensor development.

How This Fits Into Apple’s Long-Term Imaging Strategy

Apple historically prioritizes imaging improvements that offer clear benefits to users — whether through advanced software enhancements like Deep Fusion or hardware refinements like sensor-shift stabilization. If multispectral cameras become viable, they could represent the next frontier in computational photography and machine vision for iPhones.

However, analysts and insiders caution that such technology may still be years away, as Apple typically conducts extensive internal validation before taking new hardware to mass production.

Conclusion

The rumor of multispectral cameras in future iPhones — while unconfirmed — offers an exciting glimpse into what next-generation smartphone imaging might look like. Beyond incremental upgrades, this technology could empower iPhones to see and interpret the world in richer, more detailed ways than ever before.

As with all early leaks, the timeline and actual implementation remain speculative. Still, if Apple pursues multispectral imaging seriously, it could redefine the smartphone camera experience and intensify competition across the industry.

Key Highlights

- Apple is reportedly evaluating multispectral imaging technology for future iPhones, according to supply-chain leaks. (MacRumors)

- Multispectral cameras capture data beyond visible light, enabling advanced material detection and smarter image processing. (AppleInsider)

- The technology is still in early exploratory stages and unlikely to appear immediately in upcoming models. (MacRumors)

- Challenges include cost, complexity, and integration into smartphone form factors. (Android Headlines)