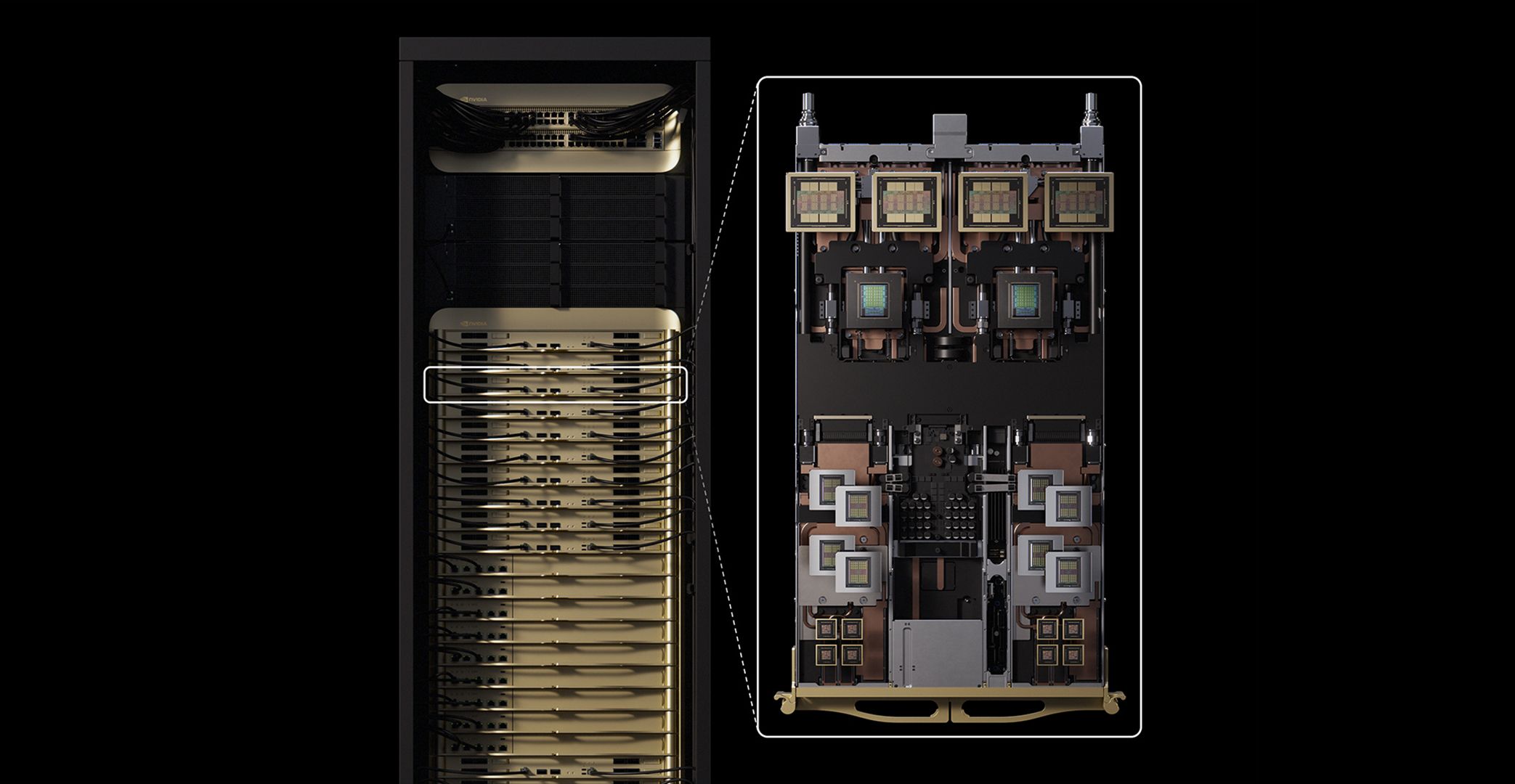

Data center GPUs from Nvidia have become the gold standard for AI training and inference thanks to their high performance, HBM with extreme bandwidth, fast rack-scale interconnects, and a mature CUDA software stack. However, as AI becomes more ubiquitous and models grow larger (especially at hyperscalers), it makes sense for Nvidia to disaggregate its inference stack and use specialized GPUs to accelerate the context phase of inference, a phase where the model must process millions…
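The case for splitting inference into phases comes down to arithmetic intensity. In the context (prefill) phase the whole prompt is processed in one pass, so each weight fetched from memory is reused across many tokens and the work tends to be compute-bound. In the generation (decode) phase only one new token per request is produced per pass, so the same weights are reused far less and memory bandwidth becomes the bottleneck. The back-of-envelope sketch below makes that concrete. It assumes FP8 weights (1 byte per parameter), roughly 2 FLOPs per parameter per token, and one read of every weight per pass. It ignores attention and KV-cache traffic. The prompt length and batch size are hypothetical and are not CPX or HBM specifications.

```python
# Back-of-envelope sketch (illustrative assumptions, not vendor figures):
# FLOPs performed per byte of weights read for one forward pass of a dense model.

def weight_arithmetic_intensity(tokens_per_pass: int, bytes_per_param: float = 1.0) -> float:
    """Return FLOPs per byte of weight traffic.

    Assumes ~2 FLOPs (multiply + add) per parameter per token and that each
    weight is read from memory once per pass. The parameter count cancels out,
    so no model size is needed. Attention and KV-cache traffic are ignored.
    """
    flops_per_param = 2 * tokens_per_pass
    return flops_per_param / bytes_per_param

# Context phase: a hypothetical 32,768-token prompt processed in one pass.
prefill = weight_arithmetic_intensity(tokens_per_pass=32_768)
# Generation phase: one token for each of a hypothetical batch of 32 requests.
decode = weight_arithmetic_intensity(tokens_per_pass=32)

print(f"prefill: {prefill:,.0f} FLOPs/byte")
print(f"decode:  {decode:,.0f} FLOPs/byte")
```

Under these assumptions the context phase does about a thousand times more math per byte of weight traffic. That gap is why an accelerator dedicated to the context phase can plausibly trade peak memory bandwidth for cheaper memory without starving its compute units.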


Nvidia’s new CPX GPU aims to change the game in AI inference — how the debut of cheaper and cooler GDDR7 memory could redefine AI inference infrastructure
